Building software is complex. Testing for regressions, making sure your application is stable, and going to production with confidence should not be.

There are already tools that facilitate visual regression testing, such as Behat and Wraith (which I used while I worked at the BBC). However, those tools take work to set up and maintain (sometimes a lot), and they won’t give you complete assurance that ALL the pages in your site are working as they were before. In other words, they don’t give you the big picture of the status of your site or sites.

In a nutshell, those tools solve a different problem from the one GlitcherBot is trying to solve.

Wouldn’t it be great to have a tool that scans all the pages in your site or application, and alerts you if any of them has changed after a deployment (or in your pre-production environment)?

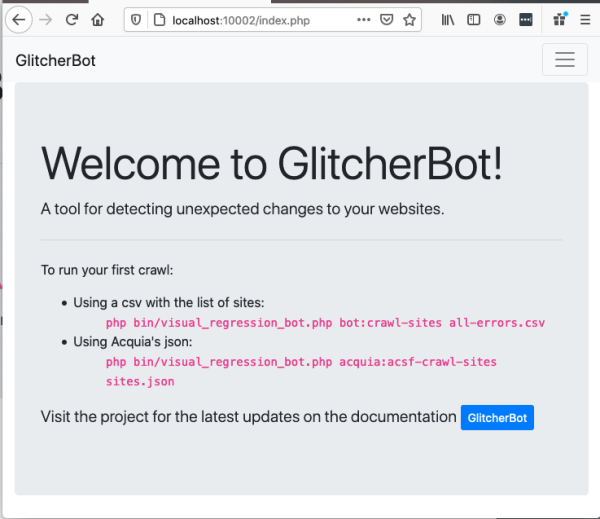

That’s the idea behind GlitcherBot. But don’t expect screen captures or any other complicated setup. To achieve its mission, GlitcherBot doesn’t look at how your pages look. Instead, it looks at their HTML weight, their status, and some statistics about the HTML and the tags in use at that particular moment.

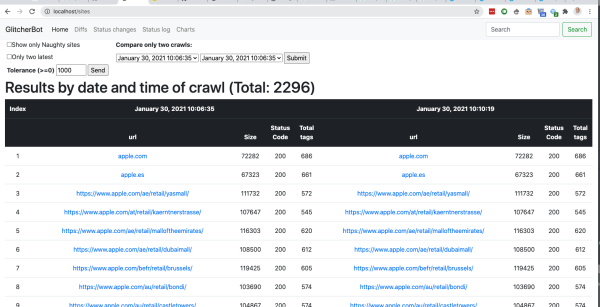

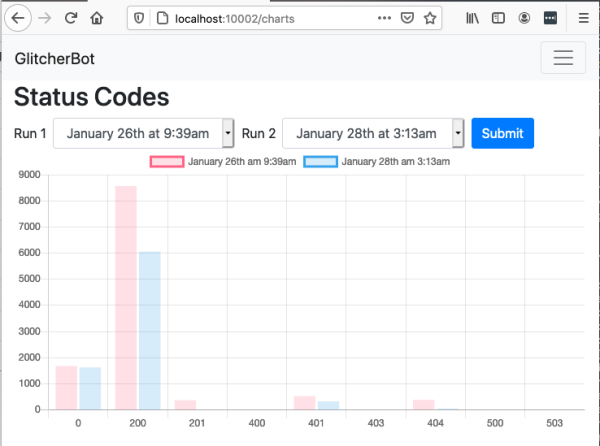
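To make the idea concrete, here is a minimal sketch of how those per-page numbers could be collected. It is not GlitcherBot’s actual code: the names `TagCounter`, `PageSnapshot` and `snapshot_page` are made up for illustration, and I’m assuming that "status" means the HTTP status code and "weight" means the size of the HTML in bytes.

```python
from collections import Counter
from dataclasses import dataclass
from html.parser import HTMLParser


class TagCounter(HTMLParser):
    """Counts every opening tag seen while parsing a document."""

    def __init__(self):
        super().__init__()
        self.tags = Counter()

    def handle_starttag(self, tag, attrs):
        # Called for <p>, <img ...> and also for self-closing tags like <br/>.
        self.tags[tag] += 1


@dataclass
class PageSnapshot:
    """Hypothetical record of one page at crawl time (an assumption, not GlitcherBot's schema)."""
    url: str
    status: int    # assumed to be the HTTP status code
    weight: int    # assumed to be the size of the HTML in bytes
    tags: Counter  # how many times each tag appears


def snapshot_page(url, status, html):
    """Build a snapshot from an already-fetched response body."""
    parser = TagCounter()
    parser.feed(html)
    parser.close()
    weight = len(html.encode("utf-8"))
    return PageSnapshot(url=url, status=status, weight=weight, tags=parser.tags)
```

The function takes the HTML as a string on purpose, so fetching the pages stays separate from measuring them.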

If those parameters change between two crawls, say, before and after a deployment, GlitcherBot will detect the change and flag it for you. Sometimes changes are expected. Sometimes they will surprise you. GlitcherBot aims to flag those changes before anyone else notices them.
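As a rough illustration of that comparison, the sketch below diffs two crawls stored as plain dictionaries. Everything here is an assumption for the sake of the example: the function name `diff_crawls`, the `status` / `weight` / `tags` keys, and the 5% weight tolerance are mine, not GlitcherBot’s real rules.

```python
def diff_crawls(before, after, weight_tolerance=0.05):
    """Return a dict mapping each changed URL to a list of reasons.

    `before` and `after` map a URL to a dict with the hypothetical keys
    "status" (int), "weight" (int, bytes) and "tags" (dict of tag -> count).
    """
    changes = {}
    for url in sorted(set(before) | set(after)):
        old, new = before.get(url), after.get(url)
        if old is None:
            changes[url] = ["page appeared"]
            continue
        if new is None:
            changes[url] = ["page disappeared"]
            continue

        reasons = []
        if old["status"] != new["status"]:
            reasons.append(f"status {old['status']} -> {new['status']}")

        # Relative change in HTML weight; small drifts are ignored (assumed threshold).
        drift = abs(new["weight"] - old["weight"]) / max(old["weight"], 1)
        if drift > weight_tolerance:
            reasons.append(f"weight {old['weight']} -> {new['weight']} bytes")

        if old["tags"] != new["tags"]:
            reasons.append("tag counts changed")

        if reasons:
            changes[url] = reasons
    return changes
```

A page whose weight moves by 1% stays quiet, while a page that starts returning a different status or loses most of its markup shows up with every reason listed. Where to set the tolerance is a judgment call that depends on how dynamic your pages are.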

In other words, GlitcherBot gives you the power of data analysis to find any potential issues.